Introducing DataConfig 1.2

2022-02-12

We released DataConfig 1.2 two weeks ago. It's a serialization framework for Unreal Engine 4 and 5. It's been 9 months since 1.0, and with this release DataConfig is considered feature complete. It now comes with:

- JSON and MsgPack read-write.

- Flexible and roundtrip-able serialization and deserialization for the UE Property System.

- Extensive documentation in the DataConfig Book.

- Tons of extra samples and ~100 unit tests.

We try to cover most programming and usage topics in our documentation; however, there wasn't really a chance to explain why we're developing DataConfig in the first place. We briefly documented our motivation here, and there's a short video recorded at the initial release.

In this post I'd like to talk more about the reasons behind DataConfig: why an alternative serialization framework for Unreal Engine might be exciting to you.

Origins

I started dabbling in Unreal Engine a few years ago, coming from a strong Unity3D background. Nowadays many developers share this experience; there's even an "Unreal Engine for Unity Developers" page in the official docs. One cool thing about Unity3D is the huge pool of ready-to-use plugins and assets. Every Unity developer has their own list of "must-have" plugins and libs to carry to every project. It's very common for us to start a project with nothing but a set of personal favorite packages and grow from there.

My #1 pick was always FullSerializer and FullInspector. They're discontinued because the author moved on to other fields, and they were later superseded by Odin Inspector and Serializer. If you already know what these libraries do and are also proficient with Unreal Engine, then DataConfig is easy to explain: DataConfig is FullSerializer/OdinSerializer implemented for Unreal Engine. Grab a copy on GitHub and try it out now! Otherwise, read on ;)

Before these, people used a patched version of Json.NET that's compatible with earlier Unity3D versions. At their core, these libraries all do one thing: convert between C# objects and JSON or some binary format. The proper terms for these processes are serialization (C# -> JSON) and deserialization (JSON -> C#).

Here's a snippet taken from the Json.NET front page:

// Deserialize JSON in C#
string json = @"{
  'Name': 'Bad Boys',
  'ReleaseDate': '1995-4-7T00:00:00',
  'Genres': [
    'Action',
    'Comedy'
  ]
}";

Movie m = JsonConvert.DeserializeObject<Movie>(json);

string name = m.Name;
// Bad Boys

Under the hood, it uses C# runtime reflection to figure out the structure of the Movie class, then accesses and assigns each field by name. Back in the land of C++, serialization isn't that simple, since C++ doesn't have built-in runtime reflection. The classic approach is manual markup with macros; modern C++ magic also works (but it's hard to understand).
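To make the "manual markup with macros" approach concrete, here is a minimal standard C++17 sketch. It is not Unreal code and not any particular library's API. `DESCRIBE_FIELD`, `TypeInfo` and `SetByName` are hypothetical names. Each field is registered by hand with its name and a setter. That is enough to assign fields by string name, which is what Json.NET gets from reflection.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>
#include <vector>

// Hand-rolled "reflection" table: field name -> setter that takes string values.
// Only std::string and std::vector<std::string> fields are supported in this sketch.
template <typename T>
using FieldSetter = std::function<void(T&, const std::vector<std::string>&)>;

template <typename T>
struct TypeInfo {
    std::map<std::string, FieldSetter<T>> Fields;
};

// Hypothetical markup macro: registers one member under its own name.
#define DESCRIBE_FIELD(Info, Type, Member)                                          \
    (Info).Fields[#Member] = [](Type& Obj, const std::vector<std::string>& Values) { \
        AssignField(Obj.Member, Values);                                              \
    }

// Scalars take the first value; arrays take all of them.
inline void AssignField(std::string& Field, const std::vector<std::string>& Values) {
    Field = Values.empty() ? std::string() : Values.front();
}

inline void AssignField(std::vector<std::string>& Field, const std::vector<std::string>& Values) {
    Field = Values;
}

struct Movie {
    std::string Name;
    std::string ReleaseDate;
    std::vector<std::string> Genres;
};

// The manual markup: every reflected field must be listed here by hand.
inline const TypeInfo<Movie>& GetMovieInfo() {
    static const TypeInfo<Movie> Info = [] {
        TypeInfo<Movie> I;
        DESCRIBE_FIELD(I, Movie, Name);
        DESCRIBE_FIELD(I, Movie, ReleaseDate);
        DESCRIBE_FIELD(I, Movie, Genres);
        return I;
    }();
    return Info;
}

// Assigns a field by its string name; returns false for unknown fields.
template <typename T>
bool SetByName(const TypeInfo<T>& Info, T& Obj, const std::string& Name,
               const std::vector<std::string>& Values) {
    auto It = Info.Fields.find(Name);
    if (It == Info.Fields.end()) return false;
    It->second(Obj, Values);
    return true;
}
```

The weak spot shows in `GetMovieInfo()`. Every field is listed by hand, and a forgotten line silently drops that field from serialization.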

JSON Converter

Unreal Engine takes an alternative approach: mark up structs with macros, then consume them in a pre-pass with a separate tool called Unreal Header Tool. It understands a subset of C++ header syntax and runs before the actual compilation. The tool generates tons of supporting code, which gets compiled along with the actual engine/game code. The end result is a runtime reflection system called the "Property System", which is almost at feature parity with C# runtime reflection.

With the Property System, one can write deserialization code similar to the C# example:

// Deserialize JSON in UE Property System
USTRUCT()
struct FMovie
{
    GENERATED_BODY()

    UPROPERTY() FString Name;
    UPROPERTY() FString ReleaseDate;
    UPROPERTY() TArray<FString> Genres;
};

FString Str = TEXT(R"(
{
    "Name": "Bad Boys",
    "ReleaseDate": "1995-4-7T00:00:00",
    "Genres": [
        "Action",
        "Comedy"
    ]
}
)");

FMovie m;
bool bOk = FJsonObjectConverter::JsonObjectStringToUStruct(Str, &m);

FString name = m.Name;
// Bad Boys

Here I want to stress that this example works in stock Unreal Engine. FJsonObjectConverter is a class in the built-in JsonUtilities module.

Compared to conventional C++ deserialization, it's already a huge step forward. If you look at its source, a huge chunk of the logic is in the ConvertScalarJsonValueToFPropertyWithContainer() method. It looks at the current FProperty, which points to a UPROPERTY()-marked field, and decides how the JSON value should be converted into it.
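The decision that method makes can be sketched in plain C++17: inspect a property's type, then pick a conversion for the incoming JSON value. Everything here is a hypothetical stand-in, not the engine's API. `PropertyKind` plays the role of an `FProperty` subclass, and `PropertyDatum` pairs it with a pointer to the field's storage. `JsonValue` is a `std::variant` standing in for the engine's JSON value types.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <variant>

// Stand-in for a parsed JSON scalar: null, bool, number or string.
using JsonValue = std::variant<std::nullptr_t, bool, double, std::string>;

// Stand-in for the property type: what kind of field we are writing.
enum class PropertyKind { Bool, Int32, Float, String };

// A property paired with a pointer to the field it describes.
struct PropertyDatum {
    PropertyKind Kind;
    void* Ptr;
};

// Looks at the property kind, then decides how the JSON value is converted.
// Returns false when the JSON type does not fit the property type.
inline bool ConvertScalar(const PropertyDatum& Datum, const JsonValue& Value) {
    switch (Datum.Kind) {
    case PropertyKind::Bool:
        if (auto* B = std::get_if<bool>(&Value)) { *static_cast<bool*>(Datum.Ptr) = *B; return true; }
        return false;
    case PropertyKind::Int32:
        if (auto* D = std::get_if<double>(&Value)) {
            *static_cast<std::int32_t*>(Datum.Ptr) = static_cast<std::int32_t>(*D);
            return true;
        }
        return false;
    case PropertyKind::Float:
        if (auto* D = std::get_if<double>(&Value)) {
            *static_cast<float*>(Datum.Ptr) = static_cast<float>(*D);
            return true;
        }
        return false;
    case PropertyKind::String:
        if (auto* S = std::get_if<std::string>(&Value)) { *static_cast<std::string*>(Datum.Ptr) = *S; return true; }
        return false;
    }
    return false;
}
```

Returning `false` on a type mismatch, rather than guessing a conversion, lets the caller report where things went wrong.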

DataConfig takes this idea further by implementing a set of APIs on top of the Property System that try to make it easier to use. As an example, here is JsonObjectStringToUStruct implemented with DataConfig:

// DataConfig/Source/DataConfigExtra/Private/DataConfig/Extra/Types/DcJsonConverter.cpp
bool JsonObjectReaderToUStruct(FDcReader* Reader, FDcPropertyDatum Datum)
{
    FDcResult Ret = [&]() -> FDcResult {
        using namespace JsonConverterDetails;
        LazyInitializeDeserializer();

        FDcPropertyWriter Writer(Datum);

        FDcDeserializeContext Ctx;
        Ctx.Reader = Reader;
        Ctx.Writer = &Writer;
        Ctx.Deserializer = &Deserializer.GetValue();
        DC_TRY(Ctx.Prepare());

        DC_TRY(Deserializer->Deserialize(Ctx));
        return DcOk();
    }();

    if (!Ret.Ok())
    {
        DcEnv().FlushDiags();
        return false;
    }
    else
    {
        return true;
    }
}

It's an almost drop-in replacement, around 20 lines long. On top of that:

- It's backed by an alternative JSON parser that supports a relaxed superset of JSON:

  - Allow C-style comments, i.e. `/* block */` and `// line`.
  - Allow trailing commas, i.e. `[1,2,3,],`.
  - Allow a non-object root. It can be a list, a string, a number or even NDJSON.

  We see these as essential QOL improvements for people who write JSON by hand.

- We took special care to provide diagnostics for quickly tracking down errors.

  Let's say we made a mistake and forgot to close a string literal:

    {
        "Name": "Bad Boys",
        "ReleaseDate": "1995-4-7T00:00:00",
        "Genres": [
            "Action, // <-- missing quote in here
            "Comedy"
        ]
    }

  DataConfig would fail and highlight the point of failure:

    * # DataConfig Error: Unclosed string literal
    * - [WideCharDcJsonReader] --> <in-memory>6:15
      4 |     "ReleaseDate": "1995-4-7T00:00:00",
      5 |     "Genres": [
      6 |         "Action,
        |         ^
      7 |         "Comedy"
      8 |     ]
    * - [DcPropertyWriter] Writing property: (FMovie)$root.(TArray<FString>)Genres[0]

  If you look closely, the diagnostic also points out the property being written at the point of failure, which is `(FMovie)$root.(TArray<FString>)Genres[0]`. These efforts are made to help you track down errors and fix them fast.

- Custom deserialize logic.

  In the C# example, the `1995-4-7T00:00:00` string would be parsed into a `DateTime` struct. This can be done within DataConfig as well: we have a uniform interface for implementing serialization logic.
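One way to get the relaxed syntax listed above is a small pre-pass. It strips comments and trailing commas before the text reaches a strict JSON parser, and it tracks line and column so it can report an unclosed string literal, much like the diagnostic shown earlier. This is only an illustration, not how DataConfig's own reader is built. `StripRelaxedJson` and `RelaxedResult` are hypothetical names. The sketch treats a raw newline inside a string as "unclosed", since strict JSON forbids raw newlines in strings.

```cpp
#include <cassert>
#include <cctype>
#include <string>

// Result of the pre-pass: strict JSON text, or the position of an unclosed string.
struct RelaxedResult {
    bool Ok = true;
    std::string Text;   // comment-free, trailing-comma-free JSON when Ok
    int ErrorLine = 0;  // 1-based position of the opening quote of an unclosed string
    int ErrorColumn = 0;
};

// Removes // line and /* block */ comments, and drops a comma when the next
// significant character is ']' or '}'. String contents are copied verbatim.
inline RelaxedResult StripRelaxedJson(const std::string& In) {
    RelaxedResult R;
    int Line = 1, Column = 1;
    std::size_t PendingComma = std::string::npos;  // index in R.Text of a possibly trailing comma
    std::size_t i = 0;

    // Consumes one input character and updates the line/column counters.
    auto Take = [&]() {
        char C = In[i++];
        if (C == '\n') { ++Line; Column = 1; } else { ++Column; }
        return C;
    };

    while (i < In.size()) {
        char C = In[i];
        char Next = i + 1 < In.size() ? In[i + 1] : '\0';
        if (C == '/' && Next == '/') {         // line comment: skip to end of line
            while (i < In.size() && In[i] != '\n') Take();
        } else if (C == '/' && Next == '*') {  // block comment: skip past "*/" (or to EOF)
            Take(); Take();
            while (i < In.size() && !(In[i] == '*' && i + 1 < In.size() && In[i + 1] == '/')) Take();
            if (i < In.size()) { Take(); Take(); }
        } else if (C == '"') {                 // string literal: copy until the closing quote
            int StartLine = Line, StartColumn = Column;
            R.Text += Take();
            bool Closed = false;
            while (i < In.size() && In[i] != '\n') {
                char S = Take();
                R.Text += S;
                if (S == '\\' && i < In.size()) { R.Text += Take(); continue; }  // keep escapes
                if (S == '"') { Closed = true; break; }
            }
            if (!Closed) {
                R.Ok = false;
                R.ErrorLine = StartLine;
                R.ErrorColumn = StartColumn;
                return R;
            }
            PendingComma = std::string::npos;
        } else if (C == ']' || C == '}') {     // a comma right before a closer is trailing
            if (PendingComma != std::string::npos) R.Text.erase(PendingComma, 1);
            PendingComma = std::string::npos;
            R.Text += Take();
        } else if (C == ',') {
            PendingComma = R.Text.size();
            R.Text += Take();
        } else {                               // whitespace keeps a pending comma alive
            if (!std::isspace(static_cast<unsigned char>(C))) PendingComma = std::string::npos;
            R.Text += Take();
        }
    }
    return R;
}
```

A production reader would do this in a single tokenizing pass. The separate pre-pass just keeps the sketch short.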

Custom Serialization

Many types in JSON and the Property System have a direct one-to-one mapping. For example, a JSON bool maps to C++ `bool`, a JSON string maps to UE `FString`, and `[1,2,3]` in JSON should be converted to a `TArray<int>` instance. However, for some data types the mapping isn't so clear, or maybe we have something very specific in mind:

- What should a `UObject*` reference be deserialized from?

- I want to read an `FDateTime` from a timestamp string like `1995-4-7T00:00:00`.
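For the second case, here is a small standalone C++17 sketch. It reads the timestamp format used in these examples, where month and day are not zero-padded, and writes it back in the same form. It does not use Unreal's `FDateTime` and is not DataConfig's handler. `DateTime`, `ParseTimestamp` and `FormatTimestamp` are hypothetical names. The range check is deliberately coarse: it does not know month lengths.

```cpp
#include <cassert>
#include <cstdio>
#include <optional>
#include <string>

struct DateTime {
    int Year = 0, Month = 0, Day = 0, Hour = 0, Minute = 0, Second = 0;
};

// Parses "Y-M-DTh:m:s"; month and day may be written without zero padding.
// The whole string must be consumed, so trailing characters are rejected.
inline std::optional<DateTime> ParseTimestamp(const std::string& S) {
    DateTime D;
    int Consumed = 0;
    int Matched = std::sscanf(S.c_str(), "%d-%d-%dT%d:%d:%d%n", &D.Year, &D.Month, &D.Day,
                              &D.Hour, &D.Minute, &D.Second, &Consumed);
    if (Matched != 6 || Consumed != static_cast<int>(S.size())) return std::nullopt;
    // Coarse range check only (no per-month day counts, no leap years).
    if (D.Month < 1 || D.Month > 12 || D.Day < 1 || D.Day > 31 || D.Hour < 0 || D.Hour > 23 ||
        D.Minute < 0 || D.Minute > 59 || D.Second < 0 || D.Second > 59)
        return std::nullopt;
    return D;
}

// Writes the value back in the same style: unpadded date, zero-padded time.
inline std::string FormatTimestamp(const DateTime& D) {
    char Buf[64];
    std::snprintf(Buf, sizeof Buf, "%d-%d-%dT%02d:%02d:%02d", D.Year, D.Month, D.Day,
                  D.Hour, D.Minute, D.Second);
    return Buf;
}
```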

DataConfig provides a mechanism to customize the mapping between the Property System and external formats. Our go-to use case is serializing FColor to a #RRGGBBAA string and vice versa:

// DataConfig/Source/DataConfigExtra/Public/DataConfig/Extra/Deserialize/DcSerDeColor.h
USTRUCT()
struct FDcExtraTestStructWithColor1
{
    GENERATED_BODY()

    UPROPERTY() FColor ColorField1;
    UPROPERTY() FColor ColorField2;
};

// DataConfig/Source/DataConfigExtra/Private/DataConfig/Extra/Deserialize/DcSerDeColor.cpp
FString Str = TEXT(R"(
{
    "ColorField1" : "#0000FFFF",
    "ColorField2" : "#FF0000FF",
}
)");

Details are documented here. The key takeaways are:

- It works recursively, as one would expect.

  This means it works with ad-hoc `FColor` fields, and also with `TArray<FColor>`, `TMap<FString, FColor>` or any arbitrary data structure that UE allows.

- Serialization and deserialization logic are separated but roundtrip-able.

  Even though serialization and deserialization share a lot in common, we decided to make them two totally separate code paths in DataConfig. By contrast, Unreal Engine's `FArchive` uses `Serialize(FArchive& Ar)` for both saving and loading. In the context of DataConfig, we think there are cases where one only wants serialization or deserialization, but not both. In fact, DataConfig 1.0 shipped with only a deserializer; the serialization APIs were added in this release. Still, we take special care to make sure the built-in serialization and deserialization are roundtrip-able: if we serialize struct `A` to JSON and then deserialize it back into instance `B`, `A` and `B` remain structurally equal.
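Both takeaways can be illustrated with a standalone C++17 sketch. This is not DataConfig's API. A `Color` type gets separate serialize and deserialize functions for the `#RRGGBBAA` form. The array helpers simply reuse the element handler, which is the "recursive" part. A roundtrip through both paths gives back an equal value. `Color`, `SerializeColor`, `DeserializeColor`, `SerializeArray` and `DeserializeArray` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <optional>
#include <string>
#include <vector>

struct Color {
    std::uint8_t R = 0, G = 0, B = 0, A = 0;
    bool operator==(const Color& O) const { return R == O.R && G == O.G && B == O.B && A == O.A; }
};

// Serialize path: Color -> "#RRGGBBAA".
inline std::string SerializeColor(const Color& C) {
    char Buf[10];  // '#' + 8 hex digits + terminator
    std::snprintf(Buf, sizeof Buf, "#%02X%02X%02X%02X", unsigned(C.R), unsigned(C.G),
                  unsigned(C.B), unsigned(C.A));
    return Buf;
}

inline int HexDigit(char Ch) {
    if (Ch >= '0' && Ch <= '9') return Ch - '0';
    if (Ch >= 'a' && Ch <= 'f') return Ch - 'a' + 10;
    if (Ch >= 'A' && Ch <= 'F') return Ch - 'A' + 10;
    return -1;
}

// Deserialize path: "#RRGGBBAA" -> Color; anything else is rejected.
inline std::optional<Color> DeserializeColor(const std::string& S) {
    if (S.size() != 9 || S[0] != '#') return std::nullopt;
    std::uint8_t Channels[4];
    for (int k = 0; k < 4; ++k) {
        int Hi = HexDigit(S[1 + 2 * k]), Lo = HexDigit(S[2 + 2 * k]);
        if (Hi < 0 || Lo < 0) return std::nullopt;
        Channels[k] = static_cast<std::uint8_t>(Hi * 16 + Lo);
    }
    return Color{Channels[0], Channels[1], Channels[2], Channels[3]};
}

// Containers reuse whatever element handler they are given.
template <typename T, typename F>
std::vector<std::string> SerializeArray(const std::vector<T>& Items, F SerializeElem) {
    std::vector<std::string> Out;
    for (const T& Item : Items) Out.push_back(SerializeElem(Item));
    return Out;
}

template <typename T, typename F>
std::optional<std::vector<T>> DeserializeArray(const std::vector<std::string>& In, F DeserializeElem) {
    std::vector<T> Out;
    for (const std::string& S : In) {
        std::optional<T> Elem = DeserializeElem(S);
        if (!Elem) return std::nullopt;  // one bad element fails the whole array
        Out.push_back(*Elem);
    }
    return Out;
}
```

Keeping the two paths as separate functions lets either one exist alone. The roundtrip property then becomes something you test rather than something a shared `Serialize` function implies.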

We aim to provide flexible customization that allows our users to do whatever they want. Let's see some examples:

- The `DefaultToInstanced` class specifier means that instances of this class are considered inline objects. This allows you to attach an arbitrary derived object inside a parent object. DataConfig allows you to mark nested objects with a `$type` field to explicitly select their type. This is often called polymorphic serialization.

  Given this toy "Object Oriented" class hierarchy:

    // DataConfig/Source/DataConfigTests/Public/DcTestProperty.h
    UCLASS(BlueprintType, EditInlineNew, DefaultToInstanced)
    class UDcBaseShape : public UObject
    {
        //...
        UPROPERTY() FName ShapeName;
    };

    UCLASS()
    class UDcShapeBox : public UDcBaseShape
    {
        //...
        UPROPERTY() float Height;
        UPROPERTY() float Width;
    };

    UCLASS()
    class UDcShapeSquare : public UDcBaseShape
    {
        //...
        UPROPERTY() float Radius;
    };

  DataConfig would handle polymorphism as expected:

    // DataConfig/Source/DataConfigTests/Private/DcTestDeserialize.cpp
    {
        "ShapeField1" : {
            "$type" : "DcShapeBox",
            "ShapeName" : "Box1",
            "Height" : 17.5,
            "Width" : 1.9375
        },
        "ShapeField2" : {
            "$type" : "DcShapeSquare",
            "ShapeName" : "Square1",
            "Radius" : 1.75,
        },
        "ShapeField3" : null
    }

- Unreal Engine allows you to attach data in metadata specifiers. Here we mark `TArray<uint8>` fields with `DcExtraBase64` so they would be deserialized from Base64 strings:

    // DataConfig/Source/DataConfigExtra/Public/DataConfig/Extra/Deserialize/DcSerDeBase64.h
    USTRUCT()
    struct FDcExtraTestStructWithBase64
    {
        GENERATED_BODY()

        UPROPERTY(meta = (DcExtraBase64)) TArray<uint8> BlobField1;
        UPROPERTY(meta = (DcExtraBase64)) TArray<uint8> BlobField2;
    };

    // DataConfig/Source/DataConfigExtra/Private/DataConfig/Extra/Deserialize/DcSerDeBase64.cpp
    FString Str = TEXT(R"(
    {
        "BlobField1" : "dGhlc2UgYXJlIG15IHR3aXN0ZWQgd29yZHM=",
        "BlobField2" : "",
    }
    )");

- In this example, we implemented a variant type `FDcAnyStruct` that embeds a heap-allocated struct of any type. It can also be deserialized from a JSON object with a `$type` field:

    // DataConfig/Source/DataConfigExtra/Public/DataConfig/Extra/SerDe/DcSerDeAnyStruct.h
    USTRUCT()
    struct FDcExtraTestWithAnyStruct1
    {
        GENERATED_BODY()

        UPROPERTY() FDcAnyStruct AnyStructField1;
        UPROPERTY() FDcAnyStruct AnyStructField2;
        UPROPERTY() FDcAnyStruct AnyStructField3;
    };

    // DataConfig/Source/DataConfigExtra/Private/DataConfig/Extra/SerDe/DcSerDeAnyStruct.cpp
    FString Str = TEXT(R"(
    {
        "AnyStructField1" : {
            "$type" : "DcExtraTestSimpleStruct1",
            "NameField" : "Foo"
        },
        "AnyStructField2" : {
            "$type" : "DcExtraTestStructWithColor1",
            "ColorField1" : "#0000FFFF",
            "ColorField2" : "#FF0000FF"
        },
        "AnyStructField3" : null
    }
    )");

- GameplayTags is a built-in runtime module that implements hierarchical tags. We map `FGameplayTag` to a string like `Foo.Bar`, or to `null` when it's empty. During deserialization we also make sure the tag exists.

    // DataConfig/Source/DataConfigEditorExtra/Public/DataConfig/EditorExtra/SerDe/DcSerDeGameplayTags.h
    USTRUCT()
    struct FDcEditorExtraTestStructWithGameplayTag1
    {
        GENERATED_BODY()

        UPROPERTY() FGameplayTag TagField1;
        UPROPERTY() FGameplayTag TagField2;
    };

    // DataConfig/Source/DataConfigEditorExtra/Private/DataConfig/EditorExtra/SerDe/DcSerDeGameplayTags.cpp
    FString Str = TEXT(R"(
    {
        "TagField1" : null,
        "TagField2" : "DataConfig.Foo.Bar"
    }
    )");
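The `$type` polymorphism and `FDcAnyStruct` both come down to the same move: read the type name first, then construct that type and fill it in. Below is a minimal standard C++17 sketch of that dispatch. It assumes the JSON object has already been parsed into a flat string map (`Fields`). The registry, the `Shape` classes and `CreateFromType` are hypothetical, and they only mirror the shape example above.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>

// A parsed JSON object, simplified to string values for this sketch.
using Fields = std::map<std::string, std::string>;

struct Shape {
    virtual ~Shape() = default;
    std::string ShapeName;
};
struct ShapeBox : Shape { float Height = 0, Width = 0; };
struct ShapeSquare : Shape { float Radius = 0; };

// Registry: type name -> factory that builds and fills the concrete type.
// Factories throw std::out_of_range if a required field is missing.
using Factory = std::function<std::unique_ptr<Shape>(const Fields&)>;

inline const std::map<std::string, Factory>& ShapeRegistry() {
    static const std::map<std::string, Factory> Registry = {
        {"DcShapeBox", [](const Fields& F) -> std::unique_ptr<Shape> {
             auto Box = std::make_unique<ShapeBox>();
             Box->Height = std::stof(F.at("Height"));
             Box->Width = std::stof(F.at("Width"));
             return Box;
         }},
        {"DcShapeSquare", [](const Fields& F) -> std::unique_ptr<Shape> {
             auto Square = std::make_unique<ShapeSquare>();
             Square->Radius = std::stof(F.at("Radius"));
             return Square;
         }},
    };
    return Registry;
}

// Reads "$type" first, then dispatches. Returns nullptr for a missing or unknown type.
inline std::unique_ptr<Shape> CreateFromType(const Fields& F) {
    auto TypeIt = F.find("$type");
    if (TypeIt == F.end()) return nullptr;
    auto FactoryIt = ShapeRegistry().find(TypeIt->second);
    if (FactoryIt == ShapeRegistry().end()) return nullptr;
    std::unique_ptr<Shape> Result = FactoryIt->second(F);
    // Fields shared by every shape are filled once the concrete type exists.
    if (auto NameIt = F.find("ShapeName"); NameIt != F.end()) Result->ShapeName = NameIt->second;
    return Result;
}
```

A `null` value such as `ShapeField3` would be handled before this dispatch and simply leaves the field empty.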

Check out more examples here and here.
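The Base64 example shows another pattern: a handler is picked by a predicate over a field's metadata rather than by its type alone. Here is a standalone C++17 sketch of that idea. It assumes a hypothetical `FieldInfo` that carries a set of metadata keys. Only fields tagged `DcExtraBase64` are decoded, using a small standard Base64 decoder (RFC 4648 alphabet with `=` padding) written for this example. None of these names are DataConfig's API.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <set>
#include <string>
#include <vector>

// Hypothetical field description: a name plus metadata keys, like meta = (DcExtraBase64).
struct FieldInfo {
    std::string Name;
    std::set<std::string> Meta;
};

// Decodes standard Base64. Returns nullopt on a bad length, bad character or bad padding.
inline std::optional<std::vector<std::uint8_t>> DecodeBase64(const std::string& In) {
    auto Value = [](char C) -> int {
        if (C >= 'A' && C <= 'Z') return C - 'A';
        if (C >= 'a' && C <= 'z') return C - 'a' + 26;
        if (C >= '0' && C <= '9') return C - '0' + 52;
        if (C == '+') return 62;
        if (C == '/') return 63;
        return -1;
    };
    if (In.size() % 4 != 0) return std::nullopt;
    std::vector<std::uint8_t> Out;
    for (std::size_t i = 0; i < In.size(); i += 4) {
        int V[4];
        int Pad = 0;
        for (int k = 0; k < 4; ++k) {
            char C = In[i + k];
            // '=' is only allowed in the last two positions of the final group.
            if (C == '=' && i + 4 == In.size() && k >= 2) { V[k] = 0; ++Pad; continue; }
            if (Pad > 0) return std::nullopt;  // data after padding
            V[k] = Value(C);
            if (V[k] < 0) return std::nullopt;  // not in the alphabet
        }
        std::uint32_t Bits = (V[0] << 18) | (V[1] << 12) | (V[2] << 6) | V[3];
        Out.push_back(static_cast<std::uint8_t>(Bits >> 16));
        if (Pad < 2) Out.push_back(static_cast<std::uint8_t>((Bits >> 8) & 0xFF));
        if (Pad < 1) Out.push_back(static_cast<std::uint8_t>(Bits & 0xFF));
    }
    return Out;
}

// Predicate: does this field ask for the Base64 handler?
inline bool WantsBase64(const FieldInfo& Field) {
    return Field.Meta.count("DcExtraBase64") > 0;
}

// Only tagged fields are handled here. Untagged ones would go to a default
// array handler (not shown), so this sketch returns nullopt for them.
inline std::optional<std::vector<std::uint8_t>> DeserializeBytes(const FieldInfo& Field,
                                                                 const std::string& Json) {
    if (!WantsBase64(Field)) return std::nullopt;
    return DecodeBase64(Json);
}
```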

Alternative Data Pipeline

Now you know that DataConfig comes with JSON and MsgPack support and allows fancy serialization and deserialization. But what should you do with it? While working on the project, we had a user story in mind: supporting an alternative data pipeline in Unreal Engine.

If you're not familiar with the concept of a data pipeline or "Data Driven Design", here's a very good post on this topic.

In short, Unreal Engine supports DataTable and DataAsset, which allow users to configure gameplay values within the editor. A common gripe is that Unreal Engine saves data to disk as uasset files, which are binary blobs. A binary format makes meaningful diffs almost impossible. It also hurts when things go wrong; a notorious dilemma is "the editor is crashing due to a bad file, but you need the editor running to fix the file".

We favor textual formats: being able to easily grep through them and peek at their content with any text editor brings us peace of mind. To be clear, many successful titles ship with binary formats. It works, but it takes effort and especially discipline to manage. There are plans to support text-based assets, but even then the textual files are not meant to be edited by humans.

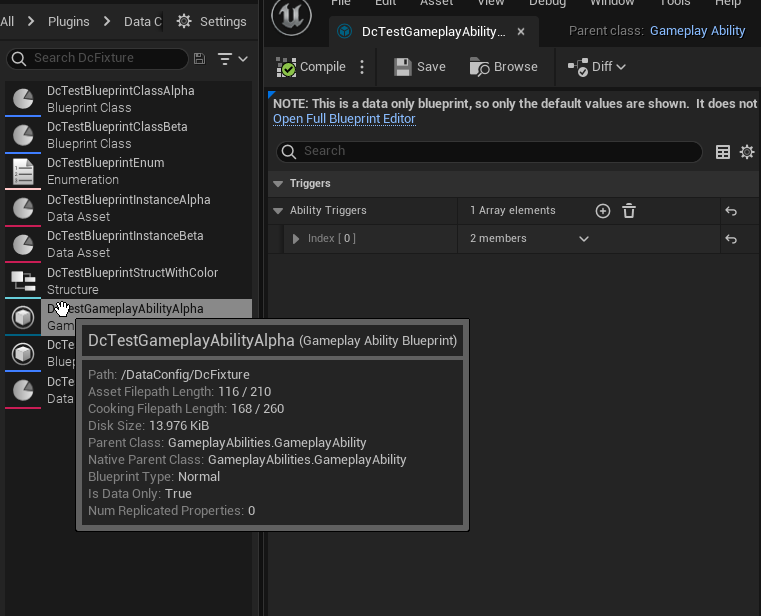

Gameplay Ability is a built-in plugin for building data-driven abilities. It's the canonical example of data-driven design in Unreal: you derive blueprint classes for custom logic, then store configuration within the class defaults.

We have an example that populates GameplayAbility and GameplayEffect from a JSON file. Given JSON like this:

// DataConfig/Tests/Fixture_AbilityAlpha.json
{
    /// Tags
    "AbilityTags" : [
        "DataConfig.Foo.Bar",
        "DataConfig.Foo.Bar.Baz",
    ],
    "CancelAbilitiesWithTag" : [
        "DataConfig.Foo.Bar.Baz",
        "DataConfig.Tar.Taz",
    ],

    /// Costs
    "CostGameplayEffectClass" : "/DataConfig/DcFixture/DcTestGameplayEffectAlpha",

    /// Advanced
    "ReplicationPolicy" : "ReplicateYes",
    "InstancingPolicy" : "NonInstanced",
}

Right-click on a GameplayAbility blueprint asset and select Load From JSON, then select this file and confirm. It would correctly populate the fields with the values in JSON, as seen in the gif below:

This example demonstrates the idea of using a set of external JSON files as the authoritative data source. In this vein we have a premium plugin called DataConfig JSON Asset on the UE marketplace.

First, write a data asset class that inherits from UDcPrimaryImportedDataAsset, a common base class provided by the plugin for asset management:

UCLASS()
class UDcJsonAssetTestPrimaryDataAsset1 : public UDcPrimaryImportedDataAsset
{
    //...
    UPROPERTY(EditAnywhere, Category="DcJsonAsset|Tests") FName AlphaName;
    UPROPERTY(EditAnywhere, Category="DcJsonAsset|Tests") bool AlphaBool;
    UPROPERTY(EditAnywhere, Category="DcJsonAsset|Tests") FString AlphaStr;
    //...
};

The next step is to prepare a JSON file on disk like this:

{
    "$type" : "DcJsonAssetTestPrimaryDataAsset1",
    "AlphaName" : "Foo",
    "AlphaBool" : true,
    "AlphaStr" : "Bar"
}

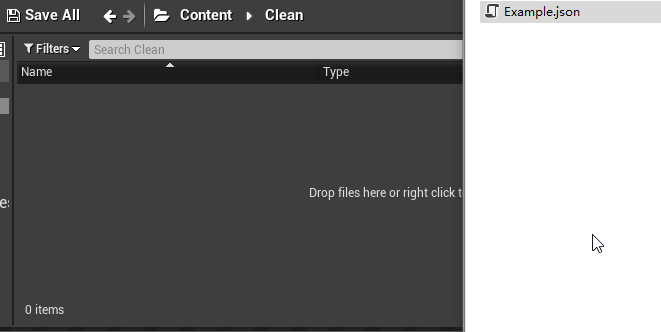

Then just drop the file into the content explorer:

The engine supports importing CSV as a DataTable out of the box. DataConfig JSON Asset follows the same practice of importing external files into the engine as uasset files. By going through this standard import procedure, we get many cool features like auto reimport on file changes. We also provide handy tools for batch import/reimport.

DataConfig JSON Asset is an example of an alternative data pipeline. Users author and modify JSON files manually, and the plugin parses, validates and imports them into the engine. In this setup, the JSON files are considered the authoritative source of truth, while the imported uasset files are considered transient: they can be wiped and recreated easily.

In reality, it's very tempting to tweak the data assets in the editor and then forget to sync the changes back to JSON. The point I want to make here is that DataConfig makes alternative data pipelines relatively easy to implement. We decided to ship neither tooling nor editor code with the DataConfig core module, as we realized there simply isn't a one-size-fits-all option in this space. What it offers is a set of tools that you'll need to spend engineering effort to build on.

Closing notes

DataConfig is something that we really wanted and hopefully it would be helpful to you. Grab it here and give it a try! It works on UE 4.25 and onward.

Here are some random notes that don't really fit into this post:

- We spent quite some time figuring out the API and eventually settled on something similar to serde.rs, especially its data model.

- DataConfig benchmark stats are here. It's around 50 MB/s for both read and write. We prioritize other metrics over runtime performance.